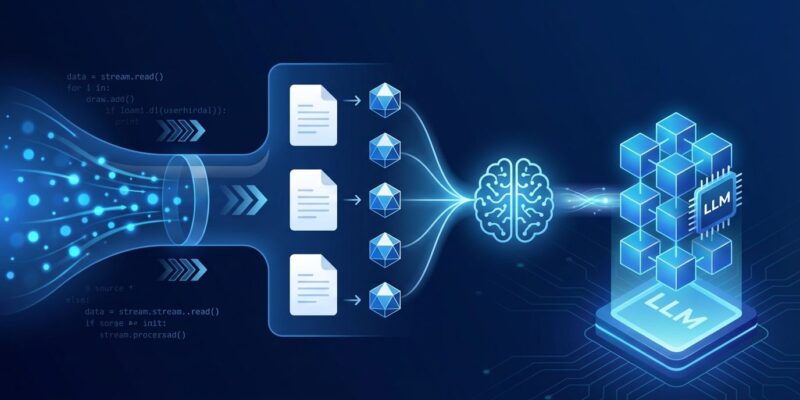

Your company’s documentation was updated two hours ago with critical API changes. A developer asks your RAG-powered chatbot about the new endpoint. It confidently returns information from yesterday’s version—completely wrong. Here’s the dirty secret most RAG tutorials won’t tell you: they use static snapshots. Your carefully indexed PDFs are stale the moment someone updates a doc. Pathway, a Python framework that gained 976 stars on GitHub this week, solves this with streaming RAG pipelines that update the instant your data changes.

The Batch RAG Problem Nobody Talks About

Traditional RAG implementations run batch jobs. You index your documents, build a vector database, and hope nothing changes before the next scheduled update. In demos with static PDFs, this works fine. In production with live documentation, customer support databases, or financial feeds, it’s a disaster.

The workarounds are all painful. Manual re-indexing doesn’t scale. Cron jobs leave gaps of hours or days. Real-time solutions require stitching together Kafka for streaming, Airflow for orchestration, and Pinecone for vector storage. What should be a straightforward RAG pipeline becomes a distributed systems nightmare.
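To put a number on those gaps, here is a minimal sketch in plain Python that computes how long an edit stays invisible to a batch-indexed RAG system when re-indexing runs on a fixed schedule. The function name, the six-hour interval, and the edit times are illustrative assumptions, not measurements.

```python
import math

def staleness_minutes(edit_minute: float, reindex_every: float) -> float:
    """Minutes an edit waits until the next scheduled re-index picks it up.

    Assumes runs start at minute 0, reindex_every, 2 * reindex_every, ...
    and ignores how long the re-index itself takes.
    """
    next_run = math.ceil(edit_minute / reindex_every) * reindex_every
    return next_run - edit_minute

# Hypothetical edits (minutes after midnight) with a re-index every 6 hours.
edits = [5, 200, 359, 361]
waits = [staleness_minutes(m, reindex_every=360) for m in edits]
print(waits)
```

An edit that lands just after a run waits almost the full interval, which is the case the opening scenario describes.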

How Pathway Unifies Real-Time RAG

Pathway is a Python ETL framework built for streaming data and LLM pipelines. You describe the pipeline in familiar Python syntax, and a multi-threaded Rust engine executes it, so the dataflow is not serialized by the Python GIL and there is no JVM to run. The framework handles 60,000 messages per second with sub-second latency.

The killer feature is unified batch and streaming processing. The same code that indexes historical data can process live streams. When a document changes, Pathway detects it, re-parses the content, updates embeddings, and refreshes the vector index—automatically. No manual triggers, no batch windows, no stale answers.
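The detect, re-parse, re-embed, refresh cycle can be sketched in plain Python. This is a toy illustration of the idea, not Pathway's implementation: `LiveIndex`, `chunk`, and `fake_embed` are hypothetical names, change detection uses a content hash, and the "embedding" is a stand-in derived from a digest.

```python
import hashlib

def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split text into chunks of at most max_words whitespace-separated words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def fake_embed(chunk_text: str) -> list[float]:
    """Stand-in for a real embedding model: a tiny deterministic vector."""
    digest = hashlib.sha256(chunk_text.encode("utf-8")).digest()
    return [b / 255 for b in digest[:4]]

class LiveIndex:
    """Toy index that re-processes only documents whose content changed."""

    def __init__(self) -> None:
        self.hashes: dict[str, str] = {}    # path -> content hash
        self.entries: dict[str, list] = {}  # path -> [(chunk, vector), ...]

    def sync(self, files: dict[str, str]) -> list[str]:
        """Align the index with files (path -> text); return the paths touched."""
        touched = []
        for path in list(self.hashes):
            if path not in files:  # deleted document: drop its chunks
                del self.hashes[path], self.entries[path]
                touched.append(path)
        for path, text in files.items():
            digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
            if self.hashes.get(path) != digest:  # new or modified document
                self.hashes[path] = digest
                self.entries[path] = [(c, fake_embed(c)) for c in chunk(text)]
                touched.append(path)
        return touched

index = LiveIndex()
first = index.sync({"api.md": "GET /v1/users", "faq.md": "How do I log in?"})
second = index.sync({"api.md": "GET /v2/users", "faq.md": "How do I log in?"})
third = index.sync({"api.md": "GET /v2/users"})
print(first, second, third)
```

Only the edited file is re-chunked and re-embedded on the second call, and the third call removes the deleted file's chunks so they can no longer be retrieved.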

Under the hood, Pathway uses Differential Dataflow for incremental computation. Instead of recomputing everything when one document changes, it processes only what’s different. This makes real-time updates fast enough for production.
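The incremental idea can be illustrated with a running word count that is updated from signed differences instead of being recomputed. This is a simplified sketch of the principle only, not Differential Dataflow itself; the helper names and sample documents are made up for the example.

```python
from collections import Counter

def word_deltas(old_text: str, new_text: str) -> Counter:
    """Signed change in word counts when a document goes from old_text to new_text."""
    delta = Counter(new_text.split())
    delta.subtract(Counter(old_text.split()))
    return Counter({word: d for word, d in delta.items() if d != 0})

def apply_deltas(totals: Counter, delta: Counter) -> None:
    """Update running totals in place, touching only words that changed."""
    for word, change in delta.items():
        totals[word] += change
        if totals[word] == 0:
            del totals[word]

docs = {"a": "fast stream fast", "b": "batch job"}
totals = Counter()
for text in docs.values():
    apply_deltas(totals, word_deltas("", text))

# Document "a" is edited: only the difference is processed, "b" is never read.
delta = word_deltas(docs["a"], "fast stream engine")
apply_deltas(totals, delta)
print(dict(delta), dict(totals))
```

The edit produces two changes (one fewer "fast", one new "engine"), so the work done is proportional to the size of the change rather than to the size of the corpus.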

Building a Real-Time RAG Pipeline

Let’s build a working pipeline that demonstrates the real-time advantage. Start with installation:

```bash
pip install pathway[xpack-llm] python-dotenv
```

Set up document indexing. Pathway’s LLM extension includes parsers for PDFs and text files, token splitters for chunking, OpenAI integration for embeddings, and an in-memory vector store.

```python
import os

import pathway as pw
from pathway.stdlib.indexing.nearest_neighbors import BruteForceKnnFactory
from pathway.xpacks.llm.document_store import DocumentStore
from pathway.xpacks.llm.embedders import OpenAIEmbedder
from pathway.xpacks.llm.parsers import UnstructuredParser
from pathway.xpacks.llm.splitters import TokenCountSplitter

# Load documents - watches directory for changes
documents = pw.io.fs.read("./data/", format="binary", with_metadata=True)

# Configure processing components
embedder = OpenAIEmbedder(api_key=os.environ["OPENAI_API_KEY"])
parser = UnstructuredParser(chunking_mode="by_title")
splitter = TokenCountSplitter(min_tokens=100, max_tokens=500)

# Create document store with real-time indexing
document_store = DocumentStore(
    docs=documents,
    retriever_factory=BruteForceKnnFactory(embedder=embedder),
    parser=parser,
    splitter=splitter,
)
```

The pw.io.fs.read call monitors the directory for changes. Add a document, update one, or delete it, and Pathway reacts immediately.
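As the name of the retriever factory suggests, retrieval here is a brute-force nearest-neighbor search: the query vector is compared against every stored chunk vector. The sketch below shows that idea in plain Python. It is an illustration, not Pathway's code; the cosine metric, the function names, and the three-dimensional vectors are assumptions chosen for the example.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def brute_force_knn(query: list[float], vectors: dict[str, list[float]], k: int = 1) -> list[str]:
    """Return the k keys whose vectors are most similar to the query."""
    scored = sorted(
        vectors.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [key for key, _ in scored[:k]]

# Hypothetical 3-dimensional "embeddings" for three document chunks.
chunks = {
    "auth": [0.9, 0.1, 0.0],
    "billing": [0.1, 0.9, 0.0],
    "webhooks": [0.0, 0.2, 0.9],
}
nearest = brute_force_knn([0.8, 0.2, 0.1], chunks, k=2)
print(nearest)
```

Exhaustive search costs one comparison per stored chunk, which is simple and exact for small collections; larger ones usually call for an approximate index.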

Wire up query handling and LLM integration:

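```python
from pathway.xpacks.llm import llms

# Request schema; the field name matches the curl example
class QuerySchema(pw.Schema):
    messages: str

# Set up REST API endpoint
webserver = pw.io.http.PathwayWebserver(host="0.0.0.0", port=8011)
queries, writer = pw.io.http.rest_connector(webserver=webserver, schema=QuerySchema)

# Retrieve relevant documents via vector search
queries = queries.select(query=pw.this.messages, k=1, metadata_filter=None, filepath_globpattern=None)
retrieved_documents = document_store.retrieve_query(queries).select(docs=pw.this.result)

# Build prompts and generate responses (the prompt template is illustrative)
@pw.udf
def build_prompt(docs, query) -> str:
    context = " ".join(str(doc["text"]) for doc in docs)
    return f"Given the following documents:\n{context}\nanswer this query: {query}"

prompts = (queries + retrieved_documents).select(prompts=build_prompt(pw.this.docs, pw.this.query))
model = llms.OpenAIChat(model="gpt-4o-mini", api_key=os.environ["OPENAI_API_KEY"])
response = prompts.select(result=model(llms.prompt_chat_single_qa(pw.this.prompts)))
writer(response)

# Run the pipeline
pw.run()
```

Test it with a curl command: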

```bash
curl --data '{"messages": "What is Pathway?"}' http://localhost:8011
```

Now add a new document to your ./data/ folder and query again. The response reflects the new content as soon as the file has been parsed and embedded. No batch job, no manual reindex, no scheduled delay. The official Pathway tutorial walks through advanced scenarios including multi-source ingestion and metadata filtering.
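The same query can be sent from Python with the standard library alone. This is a small sketch that assumes the pipeline is listening on localhost:8011 and accepts the same JSON body as the curl command; `build_query_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

def build_query_request(question: str, url: str = "http://localhost:8011") -> urllib.request.Request:
    """Build (but do not send) a POST request equivalent to the curl command."""
    body = json.dumps({"messages": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(question: str, url: str = "http://localhost:8011", timeout: float = 30.0) -> str:
    """Send the question to the running pipeline and return the raw response body."""
    with urllib.request.urlopen(build_query_request(question, url), timeout=timeout) as resp:
        return resp.read().decode("utf-8")

request = build_query_request("What is Pathway?")

# With the pipeline running locally, uncomment to send the query:
# print(ask("What is Pathway?"))
```

Calling `ask` before and after dropping a new file into ./data/ is a quick way to check that answers follow the documents.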

Traditional RAG vs Real-Time RAG

Batch RAG: Manual triggers or cron jobs for re-indexing. Data is stale between runs—hours or days behind. You need separate tools for streaming (Kafka), orchestration (Airflow), and vector storage (Pinecone). Complex to deploy and maintain.

Pathway RAG: Automatic updates on data changes. Always-current answers with instant indexing. All-in-one framework combining ETL, vector store, and LLM integration. Simpler architecture, easier deployment.

Performance matters too. Pathway’s public benchmarks show up to 90x speedups over competitors in WordCount tests, with sustained throughput beating Flink and Spark Streaming in latency-critical scenarios.

When to Use Pathway

Choose Pathway when your data changes frequently and freshness is critical. Customer support knowledge bases that update with new policies. Documentation sites where engineers commit changes daily. Financial data feeds where minutes-old information is worthless.

It’s production-ready out of the box. Docker and Kubernetes deployment is straightforward. Enterprise connectors for SharePoint, Google Drive, Kafka, PostgreSQL, and S3 are built-in. NATO and Intel use Pathway in production.

Pathway plays well with other frameworks. Use it for real-time indexing and vector storage, then integrate with LangChain for agent orchestration or LlamaIndex for advanced retrieval strategies. The llm-app repository has ready-to-run templates for common deployment patterns.

Batch RAG works for demos. Real-time RAG works for production. If your data is live and freshness matters, Pathway is built for exactly that problem.