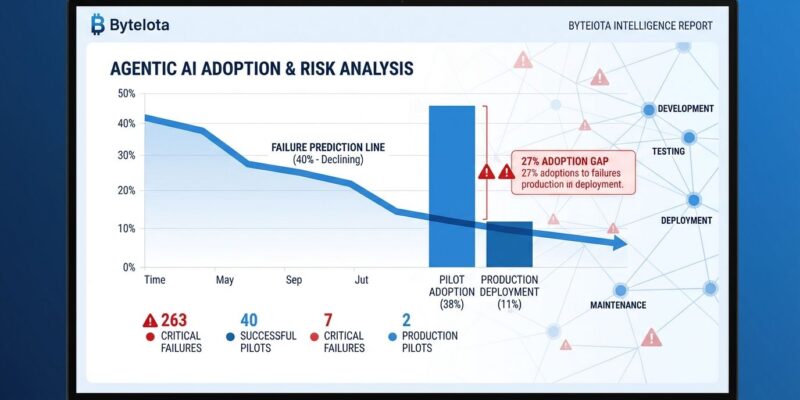

Gartner dropped a forecast in June 2025 that should terrify every CTO rushing into agentic AI: over 40% of agentic AI projects will be canceled by the end of 2027. The reason isn’t that the technology doesn’t work. Walmart’s AI agent delivered a 22% e-commerce sales increase, and banks are seeing productivity gains of 200% to 2,000% in KYC/AML workflows. The problem is simpler and more embarrassing: organizations are automating flawed processes, falling for “agent washing” from vendors, and launching hype-driven pilots without clear business value. Meanwhile, a 27-percentage-point gap separates organizations piloting agentic AI (38%) from those actually running it in production (11%). The technology works. Most organizations just aren’t ready to use it.

The Three Horsemen of Agentic AI Failure

Gartner’s analysis identifies three failure modes, and none of them are about the AI being broken. First, hype-driven implementation: “Most agentic AI projects right now are early stage experiments or proof of concepts that are mostly driven by hype and are often misapplied,” says Anushree Verma, Senior Director Analyst at Gartner. These are POCs launched because executives read a headline, not because anyone identified a clear business case. When 42% of organizations are still developing their AI strategy and 35% have no strategy at all, it’s not surprising that projects collapse under their own weight.

Second, the “agent washing” epidemic. Here’s the dirty secret: Gartner estimates only about 130 of the thousands of vendors claiming to sell agentic AI are legitimate. The rest are rebranding chatbots, RPA tools, and AI assistants as “autonomous agents” without adding actual autonomy or decision-making capabilities. It’s the AI equivalent of slapping “organic” on a candy bar and charging triple. When buyers lack the technical depth to differentiate real agentic systems from glorified if-then scripts, vendors have every incentive to exaggerate capabilities.

Third, and most damning: organizations are automating broken processes. Gartner predicts 40% of projects will fail “not due to technology limitations but because organizations are automating flawed processes.” This is the critical insight everyone misses. If your expense approval workflow is a bureaucratic nightmare of seven manual steps, three email chains, and a spreadsheet someone updates on Fridays, giving it to an AI agent doesn’t fix it—it just automates the nightmare. Fix the workflow first, then automate it. Most organizations do the opposite and wonder why their $2 million AI investment didn’t deliver magic.

The Pilot-to-Production Death Valley

The numbers expose a widening gap between experimentation and execution. In Q4 2024, 37% of enterprises had agentic AI pilots. By Q1 2025, that figure had nearly doubled to 65%. Production deployment? Stuck at 11%. That’s a 54-point gap, which practitioners call the “pilot-to-production death valley.” Hype is driving pilot launches at an accelerating rate, but few organizations are actually getting these systems into production.
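Percentage points and growth rates are easy to conflate here. The 27-point gap in the introduction compares a 38% piloting rate with 11% in production; measured against the Q1 2025 piloting figure of 65%, the gap is 54 points. This short Python calculation uses only the figures quoted in the article and also shows why 37% to 65% is "nearly doubled" rather than doubled.

```python
# Survey figures quoted in this article (percent of enterprises).
pilots_intro = 38      # piloting figure cited in the introduction
pilots_q4_2024 = 37
pilots_q1_2025 = 65
production = 11


def point_gap(a: float, b: float) -> float:
    """Difference in percentage points (not a percent change)."""
    return a - b


def growth_factor(before: float, after: float) -> float:
    """How many times larger `after` is than `before`."""
    return after / before


gap_intro = point_gap(pilots_intro, production)          # 27 points
gap_q1_2025 = point_gap(pilots_q1_2025, production)      # 54 points
pilot_growth = growth_factor(pilots_q4_2024, pilots_q1_2025)

print(f"Gap cited in the introduction: {gap_intro} points")
print(f"Pilot-to-production gap, Q1 2025: {gap_q1_2025} points")
print(f"Pilot growth, Q4 2024 -> Q1 2025: {pilot_growth:.2f}x")
```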

Why? “Technology performs well in a sandbox,” notes Deloitte, “but integration tasks like authentication, compliance workflows, and user adoption are pushed aside until executives ask for a production timeline, and by then, the pilot feels too fragile to scale.” Organizations treat pilots as science experiments instead of building for production from day one. They skip the hard work: legacy system integration (cited as a barrier by 40% of organizations), data privacy and compliance (the top concern, cited by 53%), and change management across teams. When it’s time to deploy, the fragile POC can’t handle real-world complexity.

The organizational barriers are even more telling. Three of four companies cite resistance to change as the single hardest obstacle. This isn’t a technology problem—it’s a people problem. Developers don’t trust black-box systems making decisions they’ll be blamed for. Operations teams fear accountability gaps when agents screw up. Legal wants audit trails for autonomous actions. And nobody wants to be the one who approved the AI that deleted the production database at 2 AM on a Friday. Until organizations solve trust, accountability, and governance, pilots will stay pilots.
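One concrete way to address the accountability and audit-trail concerns is to put a deny-by-default gate between an agent and the actions it can take, logging every proposal whether or not it runs. The Python sketch below is illustrative only: the action names, the two policy sets, and the `execute` and `AuditLog` names are hypothetical, and a real deployment would persist the log to tamper-evident storage rather than keep it in memory.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical policy: actions the agent may run with no human in the loop.
AUTO_APPROVED = {"read_ticket", "draft_reply", "restart_service"}
# Hypothetical policy: actions that always need a named human approver.
NEEDS_APPROVAL = {"delete_database", "issue_refund", "change_permissions"}


@dataclass
class AuditLog:
    """In-memory, append-only record of every action the agent proposes."""
    entries: list = field(default_factory=list)

    def record(self, **event) -> None:
        event["ts"] = time.time()
        self.entries.append(json.dumps(event, sort_keys=True))


def execute(action: str, agent_id: str, log: AuditLog,
            approver: Optional[str] = None,
            run: Callable[[], str] = lambda: "ok") -> str:
    """Decide whether `action` may run, log the decision, then run or block it."""
    if action in AUTO_APPROVED:
        decision = "auto"
    elif action in NEEDS_APPROVAL and approver:
        decision = f"approved_by:{approver}"
    elif action in NEEDS_APPROVAL:
        decision = "blocked_pending_approval"
    else:
        decision = "blocked_unknown_action"  # deny anything not explicitly listed
    log.record(agent=agent_id, action=action, decision=decision)
    if decision.startswith("blocked"):
        return decision
    return run()


log = AuditLog()
print(execute("draft_reply", "agent-7", log))                             # ok
print(execute("delete_database", "agent-7", log))                         # blocked_pending_approval
print(execute("delete_database", "agent-7", log, approver="oncall-lead"))  # ok
```

The deny-by-default branch is the design choice that matters: an agent that invents a new action name gets blocked and logged instead of silently executing.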

How to Identify “Agent Washing” Before You Buy

With only 130 legitimate vendors out of thousands making claims, buyers need a bullshit detector. Start with four questions that expose rebranded RPA tools. Does it take initiative? Real agents don’t wait for commands—they act based on context and goals. If your “agent” only responds to user input, it’s a chatbot with a marketing budget. Can it handle multi-step workflows? Single-step command-following is automation, not agency. Ask vendors to demonstrate complex, multi-step processes that require decision-making at each stage.

Does it learn and improve? Static rule-based systems are not agentic. Truly autonomous agents get better with experience: they learn from outcomes, adapt workflows, and improve decision quality over time. If the vendor can’t explain how the system learns or show improvement metrics, it’s agent washing. What about integration? Real agentic AI orchestrates agents across every relevant system: ERP, CRM, HRIS, ITSM. If it only works inside its own silo or is exclusively chat-based, you’re buying a confined tool, not an autonomous agent.

Watch for red flags. Vague definitions filled with buzzwords but no technical substance. Grandiose claims like “fully autonomous with human-level decision making”: no current agentic AI operates without oversight in enterprise contexts. Repackaged old tools: check the vendor’s product changelog for fundamental architecture changes, not just UI refreshes and feature additions. And critically, no emphasis on governance. Legitimate agentic AI vendors obsess over boundaries, oversight, and observability because autonomous systems require control frameworks. A vendor that skips governance is signaling that its product isn’t actually autonomous.
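The four questions and the red flags above can be recorded as a simple scorecard after each vendor demo, so that evaluations are comparable across vendors. This Python sketch is hypothetical: the `VendorReview` fields mirror the text, but the rule that a vendor is shortlisted only when all four capabilities are demonstrated and no red flag fires is an assumption, not a Gartner criterion.

```python
from dataclasses import dataclass, fields


@dataclass
class VendorReview:
    """Yes/no answers a buyer records after a vendor demo (hypothetical schema)."""
    # The four capability questions: True means the vendor demonstrated it.
    takes_initiative: bool
    handles_multi_step_workflows: bool
    learns_and_improves: bool
    integrates_across_systems: bool
    # Red flags: True means the flag was observed.
    vague_buzzword_definitions: bool = False
    claims_full_autonomy: bool = False
    only_cosmetic_changelog: bool = False
    no_governance_story: bool = False


CAPABILITIES = ("takes_initiative", "handles_multi_step_workflows",
                "learns_and_improves", "integrates_across_systems")


def assess(review: VendorReview) -> dict:
    """List missing capabilities and observed red flags, then give a verdict."""
    missing = [c for c in CAPABILITIES if not getattr(review, c)]
    red_flags = [f.name for f in fields(review)
                 if f.name not in CAPABILITIES and getattr(review, f.name)]
    # Hypothetical rule: shortlist only if every capability was shown and no flag fired.
    verdict = "shortlist" if not missing and not red_flags else "probe further"
    return {"missing": missing, "red_flags": red_flags, "verdict": verdict}


# A rebranded chatbot: answers prompts, never acts on its own, no governance story.
chatbot = VendorReview(takes_initiative=False, handles_multi_step_workflows=False,
                       learns_and_improves=False, integrates_across_systems=False,
                       no_governance_story=True)
print(assess(chatbot))
```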

The 60% That Succeed: What They Do Differently

The technology works when organizations get implementation right. Walmart’s AI Super Agent ingests real-time POS data, supply chain inputs, web traffic, weather, and local trends to autonomously forecast demand per SKU per store and initiate just-in-time restocking. Result: 22% e-commerce sales increase in pilot regions by improving availability of top-searched products. Ramp launched its AI finance agent in July 2025 to read company policy documents, audit expenses autonomously, flag violations automatically, and generate reimbursement approvals—reducing manual expense review workload by 60%. A U.K. utility company deployed agentic AI to meet regulatory requirements for contacting customers with special needs during outages, achieving compliance that conventional technologies couldn’t deliver.
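Walmart has not published how its agent decides when to restock, so the following is only a generic illustration of the final step described above: turning a per-SKU, per-store demand forecast into a just-in-time order. It uses the textbook reorder-point formula (expected demand over the supplier lead time plus a normal-approximation safety stock). The function names, the 95% service level, and the seven-day cover target are assumptions, and the forecast itself is taken as an input rather than modeled.

```python
import math
from statistics import NormalDist


def reorder_point(daily_forecast: float, daily_std: float,
                  lead_time_days: float, service_level: float = 0.95) -> float:
    """Expected demand during the lead time plus safety stock."""
    z = NormalDist().inv_cdf(service_level)  # about 1.645 for a 95% service level
    safety_stock = z * daily_std * math.sqrt(lead_time_days)
    return daily_forecast * lead_time_days + safety_stock


def restock_order(on_hand: int, on_order: int, daily_forecast: float,
                  daily_std: float, lead_time_days: float,
                  target_days_of_cover: float = 7) -> int:
    """Units to order for one SKU at one store (hypothetical policy)."""
    rop = reorder_point(daily_forecast, daily_std, lead_time_days)
    position = on_hand + on_order           # stock already available or inbound
    if position > rop:
        return 0                            # enough stock; no order yet
    target = rop + daily_forecast * target_days_of_cover
    return math.ceil(target - position)


# One SKU at one store: forecast of 20 units/day, std dev 6, 2-day supplier lead time.
print(restock_order(on_hand=30, on_order=0, daily_forecast=20,
                    daily_std=6, lead_time_days=2))
```

In an agentic setup, the forecast inputs would be refreshed from the live signals the text lists (POS, web traffic, weather), and the order would pass through the same kind of approval boundary as any other autonomous action.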

McKinsey reports that banks deploying agentic AI for KYC/AML workflows see productivity gains of 200% to 2,000% (yes, two thousand percent in some cases). Companies using agentic AI achieve up to a 30% reduction in operational costs and up to 50% faster processing times in enterprise workflows. The 60% of projects that succeed aren’t building better AI; they’re executing smarter implementations.
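Percentage gains of this size are easy to misread. A short Python calculation, assuming the usual reading that a productivity gain of X% means output per unit of effort rises by X% over baseline, shows that 200% means three times the baseline and 2,000% means 21 times. "50% faster" is ambiguous: if it means processing time is cut in half, time falls to 50% of baseline; if it means 1.5 times the speed, time falls to about 67%.

```python
def gain_to_multiplier(gain_pct: float) -> float:
    """An X% gain means the new value is (1 + X/100) times the baseline."""
    return 1 + gain_pct / 100


def reduction_to_multiplier(reduction_pct: float) -> float:
    """An X% reduction leaves (1 - X/100) of the baseline."""
    return 1 - reduction_pct / 100


def time_after_speedup(speed_increase_pct: float) -> float:
    """Fraction of baseline time left when speed rises by X%."""
    return 1 / (1 + speed_increase_pct / 100)


for g in (200, 2000):
    print(f"{g}% productivity gain -> {gain_to_multiplier(g):.0f}x baseline output")
print(f"30% cost reduction -> costs at {reduction_to_multiplier(30):.0%} of baseline")
print(f"'50% faster' as half the time -> {reduction_to_multiplier(50):.0%} of baseline time")
print(f"'50% faster' as 1.5x speed -> {time_after_speedup(50):.0%} of baseline time")
```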

What separates winners from the 40% headed for cancellation? They fix processes before automating them, understanding that AI amplifies workflows rather than repairing them. They start with clear ROI metrics and business cases, not hype-driven POCs. They assign product managers to AI services, define SLAs and SLOs, and build integration tasks (authentication, compliance, user adoption) into the pilot phase instead of treating them as afterthoughts. They emphasize governance upfront: boundaries for autonomous actions, oversight mechanisms, observability frameworks. And they recognize that AI adoption is fundamentally an organizational challenge, not a technical one, investing in change management and building trust before demanding production deployment.

Key Takeaways

- The 40% agentic AI failure rate isn’t about broken technology—it’s about broken implementation: Organizations automate flawed processes, launch hype-driven POCs without ROI, and fall for agent washing from vendors rebranding chatbots as autonomous agents.

- The pilot-to-production gap is widening, not closing: Pilots nearly doubled from 37% to 65% in one quarter, but production deployment remains stuck at 11%. Organizations treat pilots as experiments instead of building for production from day one.

- Only about 130 of thousands of agentic AI vendors are legitimate: Use the four-question framework to identify agent washing: Does it take initiative? Can it handle multi-step workflows? Does it learn and improve? Can it integrate across systems?

- Success stories prove the technology delivers massive value when implemented correctly: Walmart achieved a 22% e-commerce sales increase, banks see 200% to 2,000% productivity gains, and companies report up to 30% cost reductions and up to 50% faster processing times.

- 77% of organizations aren’t strategically ready: With 42% still developing strategy and 35% having no strategy at all, most organizations need to fix processes, define clear ROI, plan governance, and address organizational resistance before rushing into deployment.

Source: Gartner, Computerworld