You think AI coding tools make you 20% faster. A rigorous 2025 study by METR found that experienced open-source developers were actually 19% slower with them, and even after experiencing the slowdown, they still believed they had been faster. The gap between perception and reality isn’t subtle: it’s a 39-43 percentage point mismeasurement, and it calls into question the productivity case behind an AI coding market projected to reach $30.1 billion by 2032.

This paradox rests on three things: cognitive biases, code that is “almost right but not quite” (the top AI frustration, cited by 66% of developers), and a trust crisis in which trust in AI accuracy fell from 42% to 33% in just one year. With 85% of developers using AI tools but trust down 9 percentage points year over year, organizations that base AI tool decisions on developer sentiment alone may be systematically over-investing in tools that slow their teams down.

The Numbers Don’t Lie: 19% Slower Despite Feeling Faster

METR’s July 2025 randomized controlled trial recruited 16 experienced developers from large open-source repositories: projects averaging 22,000+ stars and over 1 million lines of code. These weren’t beginners tinkering with tutorials. The study used 246 real tasks from the developers’ own mature codebases (averaging 10 years old) and randomly assigned each task to one of two conditions: AI tools allowed (primarily Cursor Pro with Claude 3.5/3.7 Sonnet) or AI tools prohibited.

The results contradict the productivity narrative. Developers took 19% longer to complete tasks when AI tools were allowed than when they were not. Before starting, the same developers predicted AI would cut their completion time by 24%. The perception gap persisted afterward: even after finishing the tasks, with the actual slowdown captured by screen recordings and time logs, participants still estimated that AI had reduced their completion time by 20%.

That’s a 39-43 percentage point gap between what developers felt and what objective measurements showed. Developers also accepted less than 44% of AI generations, so most suggestions were discarded, and the time spent prompting for, waiting on, and reviewing them produced no lasting code. In this setting of complex, mature codebases, AI didn’t just fail to deliver the promised speedup; it created measurable drag.
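The 39-43 point range is simple arithmetic on the study’s headline figures. The sketch below reproduces it, treating every figure as a percentage change in task completion time (negative means faster); the constant and function names are illustrative, not METR’s.

```python
# Figures reported in METR's July 2025 study, expressed as the percentage
# change in task completion time when AI tools are allowed
# (negative = faster, positive = slower).
FORECAST_CHANGE = -24.0   # developers' prediction before starting
PERCEIVED_CHANGE = -20.0  # developers' estimate after finishing
MEASURED_CHANGE = 19.0    # observed: tasks took 19% longer


def gap_in_points(believed: float, measured: float) -> float:
    """Distance, in percentage points, between a belief and the measurement."""
    return abs(measured - believed)


forecast_gap = gap_in_points(FORECAST_CHANGE, MEASURED_CHANGE)
perceived_gap = gap_in_points(PERCEIVED_CHANGE, MEASURED_CHANGE)

print(f"Forecast vs. measured:  {forecast_gap:.0f} points")   # 43 points
print(f"Perceived vs. measured: {perceived_gap:.0f} points")  # 39 points
```

The upper bound comes from the pre-task forecast and the lower bound from the post-task estimate; both are measured against the same 19% slowdown.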

The “Almost Right” Trap

Stack Overflow’s 2025 Developer Survey points to a likely mechanism behind the slowdown. The top AI frustration isn’t that tools produce garbage code: 66% of developers cite code that’s “almost right but not quite.” AI generates plausible-looking code that passes syntax checks and feels correct at first glance, yet contains subtle logic bugs that surface only in edge cases or in production.

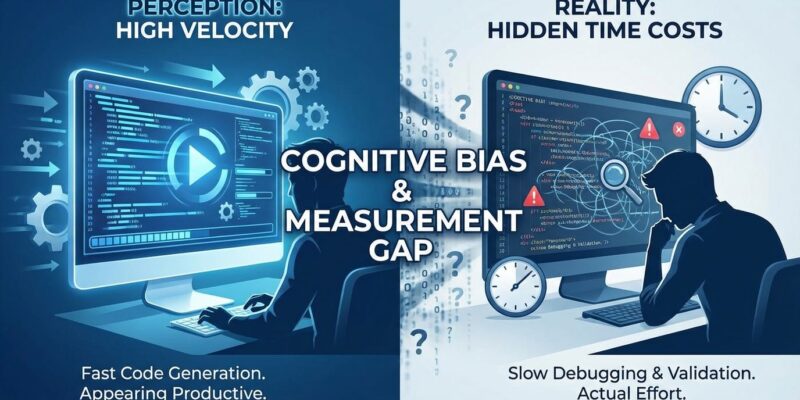

This is where the productivity paradox becomes concrete. AI generates code quickly, creating immediate, visible progress. Developers then spend substantial extra time checking whether the output is correct (not just plausible), debugging subtle bugs that slipped past initial review, re-prompting when suggestions are wrong, and fixing regressions introduced by plausible-but-incorrect code. The fast generation phase feels productive. The slow validation phase feels like ordinary debugging, so developers tend not to attribute it to the AI tool.
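A hypothetical example makes the “almost right” pattern tangible. It is not taken from the survey; the leap-year rule is used only because a plausible shortcut passes everyday checks and fails on rare inputs. Both function names are illustrative.

```python
def is_leap_year_almost_right(year: int) -> bool:
    # Plausible-looking rule that passes most casual checks.
    return year % 4 == 0


def is_leap_year(year: int) -> bool:
    # Full Gregorian rule: century years are leap years only if divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Typical spot checks: both versions agree, so the bug slips through review.
common_cases = [2019, 2020, 2023, 2024]
assert all(is_leap_year_almost_right(y) == is_leap_year(y) for y in common_cases)

# Edge cases: only targeted validation exposes the difference.
# 1900 and 2100 disagree; 2000 agrees.
for year in (1900, 2000, 2100):
    print(year, is_leap_year_almost_right(year), is_leap_year(year))
```

The flawed version costs seconds to generate; finding the bug requires someone to know which edge cases to test, which is exactly the validation time developers rarely charge to the tool.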

The trust decline likely reflects developers running into this gap. Trust in the accuracy of AI output fell from 42% in 2024 to 33% in 2025, a 9-point drop in a single year. Moreover, 46% of developers now actively distrust the accuracy of AI tools, more than the 33% who trust it. Positive sentiment declined from over 70% (2023-2024) to 60% (2025). Experienced developers are the most skeptical: only 2.6% report “high trust,” while 20% express “high distrust.”

Your Brain is Lying About Productivity

Three cognitive biases plausibly combine to create the perception gap. First, Visible Activity Bias: watching code appear on screen feels productive and delivers a psychological reward even when total time increases. Second, Attribution Bias: developers credit AI for successful code generation but blame “edge cases,” “unclear requirements,” or “complex logic” when they spend extra time debugging. Third, Sunk Cost Fallacy: after investing in AI tools, training, and workflow changes, developers rationalize the investment by convincing themselves the tools deliver value.

In METR’s study, the moderate time savings from faster code generation were more than offset by validation overhead: prompting the AI, waiting for generations, reviewing output, testing for correctness, and debugging subtle issues. Developers type less, which creates the subjective feeling of productivity. Total time increases, but this psychological accounting convinces developers they’re faster.
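The accounting can be sketched with a toy model. All minute values below are hypothetical, chosen only to show how a large drop in typing time can coexist with a longer total; they are not data from METR or any other study.

```python
# Hypothetical minutes for one task. These are illustrative values only,
# NOT measurements from METR or any other study.
manual = {"writing": 40, "testing": 20}
assisted = {
    "prompting": 8,
    "waiting for generation": 4,
    "reviewing output": 14,
    "writing": 10,  # hand-written fixes and glue code
    "testing": 20,
    "debugging subtle issues": 14,
}

manual_total = sum(manual.values())
assisted_total = sum(assisted.values())

# Typing time the AI removed vs. validation work it added.
typing_saved = manual["writing"] - assisted["writing"]
overhead = sum(
    assisted[step]
    for step in ("prompting", "waiting for generation",
                 "reviewing output", "debugging subtle issues")
)
change_pct = (assisted_total / manual_total - 1) * 100

print(f"Typing time saved: {typing_saved} min")
print(f"Overhead added:    {overhead} min")
print(f"Total time change: {change_pct:+.1f}%")
```

In this toy task, typing time falls by three quarters, which is what the developer notices. The 40 minutes of added overhead feel like ordinary work, so the net 10-minute loss goes unattributed.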

This may help explain why many organizations see no company-level velocity improvement even as individual developers insist they’re more productive. When cognitive biases systematically distort perception, subjective self-reports become unreliable. Companies measuring AI tool ROI through developer surveys are basing decisions on feelings, not facts.

Seniors Ship 2.5x More AI Code but Still Hit the Wall

Experience doesn’t eliminate the paradox; it shifts the bottleneck. Senior developers (10+ years of experience) ship 2.5 times more AI-generated code than juniors: 33% report that over half their shipped code is AI-assisted, compared with 13% of juniors. Seniors also feel more productive: 59% say AI makes them faster, versus 49% of juniors. They’re better at writing effective prompts, catching errors, and reserving AI for tasks it handles well.

However, seniors hit different walls. About 30% report editing AI output heavily enough to offset most of the time savings: they generate code quickly but spend the gains on validation and correction. Meanwhile, broad AI adoption strains code review. Teams with high AI adoption merge 98% more pull requests, but PR review times rise by 91%. The bottleneck shifts from writing code to validating it, and senior developers end up reviewing a growing volume of AI-generated output from colleagues who may not have caught its subtle bugs.

The quality impact is measurable. Uplevel Data Labs found that Copilot users introduced 9.4% more bugs than developers working without AI. At the organizational level, companies with high AI adoption often don’t ship faster or more reliably: individual velocity gains, where they exist, are absorbed by review overhead, debugging, and quality control. The paradox persists: developers feel faster while organizational velocity stays flat.

The $30B AI Coding Market Built on Perception

The AI code generation market is projected to grow from $4.91 billion in 2024 to $30.1 billion by 2032, fueled by productivity promises that rigorous independent research has so far failed to validate. Departmental spending on coding AI reached $4 billion in 2025, 55% of all departmental AI spend, and major investments continue. Anysphere (maker of Cursor) raised $900 million at a $9 billion valuation in May 2025, betting that perceived productivity gains will translate into sustained adoption.
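For scale, that projection implies compound annual growth of roughly 25%. The calculation below assumes both figures are in the same units (billions of US dollars) and spans the eight years from 2024 to 2032; the `cagr` helper is a standard formula, written out here for illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from start to end."""
    return (end / start) ** (1 / years) - 1


# Market size in billions of USD, 2024 -> 2032 (8 years).
rate = cagr(4.91, 30.1, 2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # roughly 25% per year
```

Sustaining a growth rate like that for eight years requires continued spending decisions, which is why the perception gap matters commercially.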

The market faces a sustainability problem. Adoption is high (85% of developers use AI tools regularly, 51% daily), but trust is eroding. The gap between vendor-sponsored and independent research is stark. GitHub’s randomized controlled trial found 55.8% faster completion on a single, simple task: implementing an HTTP server. METR’s independent study of experienced developers working in complex, real-world codebases found a 19% slowdown. Context matters: AI effectiveness on small, self-contained tasks doesn’t predict performance on mature, million-line repositories.

Can a $30 billion market be sustained when its foundational promise, productivity gains, rests on perception rather than measurement? Trust falling from 42% to 33% in one year suggests developers are noticing the gap between marketing claims and their daily reality. If independent research keeps showing slowdowns while vendors claim transformative speedups, the disconnect could force a market correction. The industry needs to shift its focus from “faster code generation” to “faster overall development,” which means reducing validation overhead, not just increasing generation speed.

Measure Reality, Not Feelings

The AI coding productivity paradox shows that subjective feelings about productivity can diverge sharply from objective outcomes. The implications extend beyond individual developers to organizational strategy and to a multi-billion-dollar market built on largely unvalidated promises. What can developers and engineering leaders do?

- Don’t trust feelings; measure actual outcomes. Track task completion time (wall clock, not estimates), bug rates, PR review time, and code acceptance rates. In METR’s study, developers’ own estimates missed the measured effect by 39-43 percentage points.

- Use AI selectively, not universally. AI tools deliver genuine value for routine tasks (30-60% time savings on boilerplate, test generation, documentation). They create overhead for complex logic in mature codebases. Match tool usage to task characteristics.

- Expect low acceptance rates. In METR’s study, developers accepted less than 44% of AI generations. Factor the discarded work into ROI calculations. If you’re spending more than 5 minutes fixing AI output, just write it yourself.

- Scale review capacity as AI adoption increases. On teams with high AI adoption, PR review times have risen by 91%. The bottleneck shifts from writing code to validating it, and organizational velocity won’t improve unless you address the review constraint.

- Question vendor-sponsored research. GitHub’s 55.8% speedup came from a single, simple, self-contained task. Independent research on complex, real-world codebases shows a 19% slowdown. Effectiveness depends on context: codebase size, maturity, complexity, and developer experience all matter.
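One minimal way to act on the measurement advice is to log every task and compare conditions directly. The sketch below assumes a hypothetical per-task log: the `TaskRecord` fields and both helper functions are illustrative, not any tool’s export format. It computes a naive change in mean wall-clock time and the suggestion acceptance rate; a rigorous analysis would also control for task size and developer, which this sketch does not.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    """One completed task from a hypothetical tracking log."""
    ai_allowed: bool
    wall_clock_minutes: float
    suggestions_offered: int = 0
    suggestions_accepted: int = 0


def time_change_pct(records: list[TaskRecord]) -> float:
    """Percent change in mean completion time for AI-allowed tasks.

    Naive comparison of means; assumes tasks were randomly assigned to
    conditions. Raises statistics.StatisticsError if either condition
    has no records.
    """
    with_ai = [r.wall_clock_minutes for r in records if r.ai_allowed]
    without_ai = [r.wall_clock_minutes for r in records if not r.ai_allowed]
    return (mean(with_ai) / mean(without_ai) - 1) * 100


def acceptance_rate(records: list[TaskRecord]) -> float:
    """Share of offered AI suggestions that were kept (0.0 if none offered)."""
    offered = sum(r.suggestions_offered for r in records if r.ai_allowed)
    accepted = sum(r.suggestions_accepted for r in records if r.ai_allowed)
    return accepted / offered if offered else 0.0


# Hypothetical example log, not real data.
log = [
    TaskRecord(True, 90, suggestions_offered=10, suggestions_accepted=4),
    TaskRecord(True, 110, suggestions_offered=6, suggestions_accepted=2),
    TaskRecord(False, 80),
    TaskRecord(False, 100),
]
print(f"Time change with AI: {time_change_pct(log):+.1f}%")
print(f"Acceptance rate: {acceptance_rate(log):.0%}")
```

Because the comparison uses logged wall-clock time rather than developers’ impressions, it sidesteps the self-report bias described above, though it still needs enough tasks per condition to be meaningful.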

Ignoring the paradox won’t make it disappear. As the $30 billion market matures, tools will need to address the validation overhead that makes developers slower despite feeling faster. Until then, trust your measurements, not your gut.