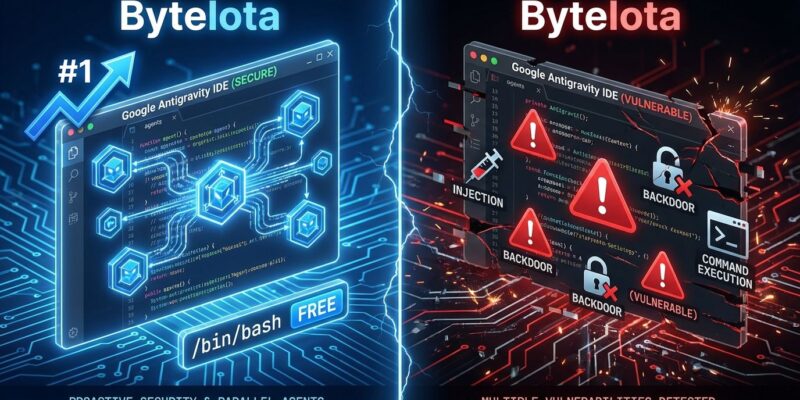

Google launched Antigravity in November 2025, and by December it claimed the top spot in LogRocket’s AI developer tool rankings—beating Cursor and Windsurf. The catch? Security researchers discovered five critical vulnerabilities within 24 hours of launch, including data exfiltration and persistent backdoors. The question isn’t whether Google Antigravity is innovative. It’s whether Google’s free model is worth the security trade-offs.

The Free Disruption

Antigravity is completely free during public preview. Not freemium. Full access at zero cost.

That puts it against Cursor ($20-$200/month) and Windsurf ($15-$30/month). When LogRocket evaluated AI developer tools in December using technical performance, usability, value proposition, and accessibility, Antigravity ranked first. The free pricing disrupted a market already worth over $2 billion and projected to hit $24 billion by 2030.
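Those two market figures imply an aggressive growth rate. The article gives no base year, so assume the “over $2 billion” figure describes 2025 and compounds over the five years to 2030:

```latex
\text{CAGR} = \left(\frac{24}{2}\right)^{1/5} - 1 = 12^{1/5} - 1 \approx 0.644
```

Because the base is “over” $2 billion, roughly 64% a year is an upper bound on the growth rate the projection implies.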

But is this sustainable? The AI coding tools market is exploding: 50-65% of developers now use these tools daily, and Cursor’s maker, Anysphere, crossed $100M in annual recurring revenue (ARR) in record time. Google sees the same opportunity. So why give it away free?

Either this is a land grab before Google reveals enterprise pricing through Vertex AI, or it’s data collection disguised as developer tooling. When the product is free, you’re often the product.

Multi-Agent Orchestration Changes the Game

Strip away pricing, and Antigravity still represents a technical leap. It’s the only AI IDE with true multi-agent architecture.

Cursor, Windsurf, and GitHub Copilot give you one agent at a time. Antigravity’s Manager Surface lets you spawn and orchestrate multiple agents working asynchronously across workspaces. Dispatch five agents to tackle five bugs simultaneously, and as long as the bugs are independent, your throughput multiplies.
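To make the throughput argument concrete, here is a minimal Python sketch of fan-out dispatch, assuming each agent works on an independent task. `run_agent`, `dispatch`, and `AgentResult` are hypothetical names, not Antigravity’s API. The `asyncio.sleep` call stands in for the model and tool calls a real agent would make.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class AgentResult:
    task: str
    summary: str


async def run_agent(task: str, seconds: float) -> AgentResult:
    """Hypothetical stand-in for one agent working on one task.

    A real agent would call a model and tools; here we only sleep to
    simulate independent, I/O-bound work.
    """
    await asyncio.sleep(seconds)
    return AgentResult(task=task, summary=f"proposed fix for {task}")


async def dispatch(tasks: list[str], seconds: float = 0.1) -> list[AgentResult]:
    # Start one agent per task and wait for all of them.
    # asyncio.gather returns results in the same order as the input tasks.
    return await asyncio.gather(*(run_agent(t, seconds) for t in tasks))


if __name__ == "__main__":
    bugs = [f"bug-{i}" for i in range(1, 6)]
    for result in asyncio.run(dispatch(bugs)):
        print(result.task, "->", result.summary)
```

Because the five simulated agents wait concurrently, the batch takes roughly as long as the slowest agent rather than the sum of all five. Real gains shrink when tasks touch the same files or compete for the same rate limits.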

Google’s demo proves the point: “Build a flight tracker with real-time updates” produced 300+ lines of production-ready React and Node.js in 28 minutes versus 6+ hours traditionally. A fintech company reportedly migrated 50,000 lines of Python 2.7 to Python 3.12. Developers with minimal experience built Android apps in under 30 minutes.

This isn’t incremental. Multi-agent orchestration reimagines developer-AI interaction—managing a team of agents rather than babysitting one assistant. That’s the future of agentic coding, assuming security issues don’t kill it first.

Artifacts Solve the Trust Problem

When an AI agent claims “I fixed the bug,” how do you verify without reading every line? Most tools dump logs. Antigravity generates Artifacts.

Artifacts are human-readable outputs for verification at a glance: task lists, implementation plans, screenshots, browser recordings. Before writing code, the agent generates a structured plan. After UI changes, it captures before-and-after screenshots. For dynamic interactions, it records videos.
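One way to picture this verification layer is as a review checklist attached to each agent run. The sketch below is a hypothetical data model. None of the class or field names come from Antigravity. It captures only the idea that a change should not be accepted until a plan exists and a human has reviewed every artifact.

```python
from dataclasses import dataclass, field
from enum import Enum


class ArtifactKind(Enum):
    TASK_LIST = "task_list"
    IMPLEMENTATION_PLAN = "implementation_plan"
    SCREENSHOT = "screenshot"
    BROWSER_RECORDING = "browser_recording"


@dataclass
class Artifact:
    kind: ArtifactKind
    title: str
    # Text artifacts hold their body here; media artifacts hold a file path.
    content: str
    approved: bool = False


@dataclass
class AgentRun:
    goal: str
    artifacts: list[Artifact] = field(default_factory=list)

    def add(self, artifact: Artifact) -> None:
        self.artifacts.append(artifact)

    def pending_review(self) -> list[Artifact]:
        # Artifacts a human has not yet signed off on.
        return [a for a in self.artifacts if not a.approved]

    def ready_to_merge(self) -> bool:
        # Hypothetical rule: require a plan plus human sign-off on every artifact.
        has_plan = any(a.kind is ArtifactKind.IMPLEMENTATION_PLAN for a in self.artifacts)
        return has_plan and not self.pending_review()
```

For example, a run that holds an implementation plan and an “after” screenshot stays unmergeable until both are marked approved. Reviewing two short artifacts is the “verification at a glance” the article describes.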

This addresses a real problem. AI coding tools have reached 84% adoption, yet only 33% of developers trust their output. Developers use these tools but don’t fully trust them. Artifacts provide the missing verification layer.

The irony? Antigravity solves AI code trust while creating new security trust problems.

Security Holes You Could Drive a Truck Through

Within 24 hours of launch, researchers identified five critical vulnerabilities, including data exfiltration via indirect prompt injection, persistent backdoors that survive a complete uninstall, and remote command execution.

Google’s response? These behaviors are “intended.” The Bug Hunters platform lists “agent has access to files” and “permission to execute commands” as invalid reports. Translation: Working as designed.

Here’s why that’s alarming. Antigravity ships with “Agent Decides” as the default, removing humans from the loop. “Terminal Command Auto Execution” allows agents to run system commands without confirmation. Researchers call this the “lethal trifecta”: simultaneous access to untrusted input (web), private data (codebase), and external communication (internet). When all three are present, instructions hidden in a web page can tell the agent to read your secrets and send them out, so data exfiltration becomes almost inevitable.
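The trifecta works as a simple conjunction, which makes it easy to express as a guard. The following is a minimal Python sketch under that simplified model. `AgentCapabilities`, `has_lethal_trifecta`, and `requires_confirmation` are hypothetical names, not Antigravity settings. The policy shown, allowing auto-execution only when at least one leg is missing, is an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    reads_untrusted_input: bool    # e.g. fetches web pages or opens third-party files
    reads_private_data: bool       # e.g. can read the local codebase or secrets
    communicates_externally: bool  # e.g. can make network requests from the terminal


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """True only when all three legs are present at once."""
    return (caps.reads_untrusted_input
            and caps.reads_private_data
            and caps.communicates_externally)


def requires_confirmation(caps: AgentCapabilities, auto_execute: bool) -> bool:
    """Decide whether a terminal command must wait for a human.

    Hypothetical policy: with auto-execution off, always ask; with it on,
    still ask whenever the agent holds the full trifecta.
    """
    if not auto_execute:
        return True
    return has_lethal_trifecta(caps)
```

In this model, removing any single leg breaks the exfiltration path, for example by blocking outbound network access from the agent’s terminal. That is why shipping auto-execution with all three legs enabled by default draws so much criticism.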

One researcher demonstrated a malicious “trusted workspace” embedding a persistent backdoor that triggers on any future launch, even after uninstall and reinstall. These vulnerabilities were inherited from Windsurf and known since at least May 2025.

The assessment: “Promising but proceed carefully.” Not ready for production with sensitive data. Regulated industries should wait.

This isn’t a beta bug. It’s a design choice prioritizing speed over security. For a tool accessing your codebase, running terminal commands, and connecting to the internet, that’s reckless.

Performance Justifies the Hype, Security Doesn’t

Gemini 3 Pro, the model Antigravity is built around, scored 76.2% on SWE-bench Verified, just 1 percentage point behind Claude Sonnet 4.5’s 77.2%. That’s frontier-level performance. Antigravity also supports multiple models (Gemini 3 Pro/Flash/Deep Think, Claude Sonnet 4.5/Opus 4.5, and GPT-4o), reducing vendor lock-in.
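The gap is small either way, but “percent” and “percentage points” are easy to conflate:

```latex
\begin{aligned}
\text{absolute gap} &= 77.2\% - 76.2\% = 1.0 \text{ percentage point} \\
\text{relative gap} &= \frac{77.2 - 76.2}{77.2} \approx 0.013 \approx 1.3\%
\end{aligned}
```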

The 2-million-token context window handles large codebases. The dual-interface design, which pairs a conventional editor with the Manager Surface, provides flexibility. Developers report “mental freedom” and describe it as “a co-engineer, not a text generator.”
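To judge whether a given repository fits in a window of that size, a rough estimate is often enough. This minimal Python sketch relies on a common rule of thumb of about four characters per token. Real tokenizers vary by language and content, so treat the result as an order-of-magnitude guide. The function names and the default file-suffix list are hypothetical choices.

```python
from pathlib import Path

# Assumption: roughly 4 characters of source code per token (rule of thumb only).
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW_TOKENS = 2_000_000  # the figure quoted in the article


def estimate_tokens(root: str,
                    suffixes: tuple[str, ...] = (".py", ".ts", ".js", ".java", ".go")) -> int:
    """Approximate the token count of all source files under root."""
    total_chars = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            # Ignore undecodable bytes rather than failing on binary-ish files.
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(root: str) -> bool:
    return estimate_tokens(root) <= CONTEXT_WINDOW_TOKENS
```

At four characters per token, 2 million tokens corresponds to roughly 8 million characters of source. Under that assumption, many mid-sized repositories fit whole, while large monorepos still need file selection or retrieval.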

The innovation is real. Multi-agent orchestration, Artifacts for trust, competitive performance, and zero cost create compelling value. But innovation doesn’t excuse security negligence. Google rushed this launch. Vulnerabilities discovered within 24 hours prove it. Calling them “intended” suggests a company valuing market disruption over developer safety.

The Verdict

Antigravity represents a technical leap in agentic coding. Multi-agent orchestration changes how developers work with AI. Artifacts solve trust verification. Free pricing disrupts a market where competitors charge up to $200 a month.

But security issues are disqualifying for anything beyond experimentation. Data exfiltration, persistent backdoors, and Google’s “intended” vulnerability stance mean Antigravity isn’t production-ready. Use it for side projects and learning. Don’t point it at proprietary codebases until Google fixes the security model.

The biggest question remains: What’s Google’s endgame? Free tools accessing your codebase, executing commands, and connecting to the internet aren’t charity. Whether this bet pays off depends on what Google does next—and whether developers trade security for innovation.

For now: Great idea, terrible execution. Come back when the security isn’t “intended.”