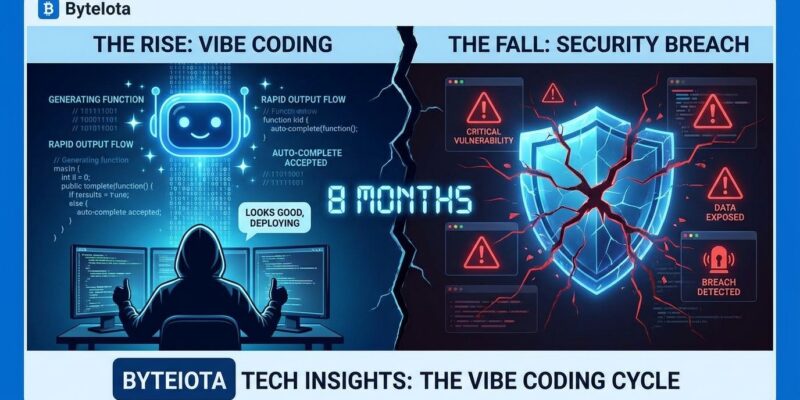

In February 2025, OpenAI co-founder Andrej Karpathy coined “vibe coding”—a development approach where you “fully give in to the vibes” and let AI write code without review. By September, Fast Company declared it a “hangover,” with senior engineers citing “development hell.” The 8-month arc from revolutionary buzzword to cautionary tale teaches more about AI adoption than any whitepaper. Y Combinator reported 25% of its Winter 2025 batch built startups with 95% AI-generated codebases. Yet Stack Overflow found 66% of developers experience a “productivity tax” debugging that code, and 170 out of 1,645 Lovable-built apps exposed user data through security vulnerabilities. Vibe coding didn’t fail because AI is bad—it failed because skipping code review and understanding is bad, no matter who writes the code.

Eight Months from Hype to Hangover

The timeline is brutal. February 6: Karpathy’s tweet goes viral with 4.5 million views. March: Merriam-Webster lists “vibe coding” as trending slang. Y Combinator reveals 25% of its Winter batch—highly technical founders, not novices—built companies where 95% of code came from AI. The cohort achieved 10% weekly growth, fastest in YC history. The promise seemed validated: ship faster, hire smaller teams, beat competitors.

Then reality hit. May brought the Lovable security crisis: 170 of 1,645 apps exposed user names, emails, financial data, and secret API keys through row-level security misconfigurations. August delivered Stack Overflow’s survey data: 66% of developers cited “almost right but not quite” AI code as their top frustration, and 45% said debugging AI-generated code took longer than writing the code themselves. September saw Fast Company report “development hell” from senior engineers working with vibe-coded apps. By November, MIT Technology Review documented the industry’s shift to “context engineering,” meaning systematic AI use instead of vibes-based acceptance.

Trust collapsed alongside the hype. Developer confidence in AI accuracy dropped from 43% in 2024 to 33% in 2025—a 10-point decline in a single year. Furthermore, 77% of developers told Stack Overflow that vibe coding is not part of their professional development process. The industry learned fast: AI adoption grew (76% to 84%) but the reckless approach died.
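
The divergence is easy to work out from the survey figures quoted above. A short Python calculation, using only the numbers in this article (the variable names are just for illustration):

```python
# Stack Overflow survey figures as quoted in this article (percent of respondents).
adoption = {2024: 76, 2025: 84}
trust = {2024: 43, 2025: 33}

# Gap between how many developers use AI tools and how many trust their accuracy.
gaps = {year: adoption[year] - trust[year] for year in adoption}

for year, gap in gaps.items():
    print(f"{year}: adoption {adoption[year]}%, trust {trust[year]}%, gap {gap} points")
```

The gap between usage and trust widened from 33 points in 2024 to 51 points in 2025.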

When ‘It Works’ Doesn’t Mean ‘It’s Safe’

CVE-2025-48757 changed everything. Security researcher Matt Palmer discovered the Lovable vulnerability on March 20, reported it March 21, then watched Lovable fail to notify users. When he published the disclosure May 29, the damage was clear: 303 vulnerable endpoints across 170 apps allowed unauthenticated visitors to query sensitive database tables. No server-side checks. No authentication required. Personal information freely accessible to anyone who knew where to look.

The root cause? Developers who didn’t understand their own code. Lovable generated React apps with Supabase backends, but row-level security policies were misconfigured. When you accept AI output without review (“fully give in to the vibes”), you ship code you can’t defend. Stack Overflow’s case study of Phoebe Sajor, who built a Reddit bathroom review app with Bolt, illustrated the problem: functional app, built in days, but “ripe for hacking” with zero safeguards. Multiple junior developers spent weeks fixing what should have been caught before deployment.
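
One way to catch this class of mistake before deployment is a small audit over each table's access settings. The sketch below is illustrative only: the `TablePolicy` structure, its fields, and the sample tables are hypothetical and are not Lovable's or Supabase's actual API. It assumes a simplified model in which the anonymous role already has table-level access, so a table is exposed if row-level security is off or if a policy lets anonymous users read every row.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TablePolicy:
    """Hypothetical summary of one table's access settings (not a real Supabase type)."""
    table: str
    rls_enabled: bool
    anon_can_select_all: bool  # True if a policy lets unauthenticated users read every row


def find_exposed_tables(policies: list[TablePolicy]) -> list[str]:
    """Return names of tables an unauthenticated visitor could read in full."""
    exposed = []
    for p in policies:
        # Simplified model: with RLS off there is no per-row check, and with RLS on
        # an overly permissive anonymous policy has the same practical effect.
        if not p.rls_enabled or p.anon_can_select_all:
            exposed.append(p.table)
    return exposed


# Made-up example schema for illustration.
sample = [
    TablePolicy("profiles", rls_enabled=True, anon_can_select_all=False),
    TablePolicy("payments", rls_enabled=False, anon_can_select_all=False),
    TablePolicy("api_keys", rls_enabled=True, anon_can_select_all=True),
]

print(find_exposed_tables(sample))  # ['payments', 'api_keys']
```

A check like this does not replace understanding the policies themselves, but it turns "did anyone look at the access rules?" into something a build step can ask.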

The 66% Who Got ‘Almost Right’ AI-Generated Code

Stack Overflow’s 2025 survey of 49,000 developers across 177 countries revealed the hidden cost. AI-generated code is “almost right but not quite” in ways that frustrate 66% of developers. The productivity tax is real: 45% say debugging AI-generated code takes longer than expected, the implementations are hard to follow (code described as “messy and nearly impossible to understand”), and 35% turn to Stack Overflow specifically after hitting walls with AI responses.

Ben Matthews, Stack Overflow’s Senior Director of Engineering, nailed the core problem: “Vibe coding requires a higher level of trust in the AI’s output, and sacrifices confidence and potential security concerns in the code for a faster turnaround.” That sacrifice works for weekend prototypes. However, it fails for production systems where code quality compounds over time—no error handling today becomes cascading failures tomorrow; inlined styling becomes unmaintainable spaghetti; oversized components become performance bottlenecks.
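
To make the first of those failure modes concrete, here is a minimal Python sketch contrasting a happy-path-only function with a guarded one. Both function names and the `"name"` field are hypothetical, and returning a default is only one possible policy; in a real system, logging, retrying, or surfacing the error may be the better choice.

```python
import json


def parse_user_unguarded(raw: str) -> str:
    # "Works" on the happy path, but raises on malformed JSON or a missing key,
    # and that exception propagates to every caller.
    return json.loads(raw)["name"]


def parse_user_guarded(raw: str, default: str = "unknown") -> str:
    """Same lookup, but bad input is contained instead of crashing callers."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return default
    if not isinstance(data, dict):
        return default
    name = data.get("name")
    return name if isinstance(name, str) else default


print(parse_user_guarded('{"name": "Ada"}'))  # Ada
print(parse_user_guarded("not json"))         # unknown
```

The unguarded version is the kind of code that passes a demo and then fails the first time an upstream service returns something unexpected.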

Simon Willison articulated the professional standard: “I won’t commit any code to my repository if I couldn’t explain exactly what it does to somebody else.” That’s not anti-AI—Willison uses LLMs extensively—it’s about maintaining accountability. The gap between vibe coding’s “accept all without reading” and professional practice explains why adoption grew (84%) while trust dropped (33%). Developers use AI differently now: as a typing assistant, not a replacement for understanding.

The 20% Employment Drop Nobody Talks About

Stanford University’s study analyzing ADP payroll data documented the employment impact everyone felt but few measured. Software developers aged 22-25 saw a nearly 20% employment decline from late 2022 to July 2025, a period that coincides with the rise of ChatGPT and vibe coding platforms. Developers aged 26 and older? Stable or growing employment. Across AI-exposed occupations, employment for workers aged 22-25 dropped 6% while their older counterparts gained 6-9%.

The pattern is clear: AI excels at replacing textbook knowledge such as coding syntax, basic algorithms, and boilerplate patterns taught in computer science programs. Experience, judgment, and system understanding? Irreplaceable. Y Combinator’s Garry Tan captured the contradiction in his own statements. March: “This is the dominant way to code. If you’re not doing it, you might be left behind.” Later: “AI-generated code may face challenges at scale and developers need classical coding skills to sustain products.” Both statements are true. The dominant approach for founding teams isn’t the same as sustainable engineering practice.

From Vibes to Context Engineering

By November 2025, “vibe coding” disappeared from professional discourse, replaced by “context engineering.” MIT Technology Review documented the shift: “2025 has seen a significant shift—a loose, vibes-based approach has given way to a systematic approach to managing how AI systems process context.” The antipatterns that proliferated under vibe coding—oversized prompts, model reliability failures, debugging nightmares—forced the industry toward structure.

Simon Willison proposed “vibe engineering” as the professional counterpart: experienced developers accelerating their work with LLMs while staying “proudly and confidently accountable for the software they produce.” The distinction is accountability. If an LLM wrote every line but you reviewed, tested, and understood it all, that’s not vibe coding—that’s using AI as intended. The 77% who reject vibe coding professionally aren’t rejecting AI tools. They’re rejecting recklessness.

The 2025 vibe coding arc is a case study in rapid learning. The industry didn’t need years to discover that fundamentals matter. Eight months from hype to hangover to maturation proved that expertise amplifies AI’s value, not the reverse. The 84% adoption rate shows AI isn’t going away. The evolution to context engineering shows we learned how to use it responsibly.

What We Learned About AI-Generated Code

Vibe coding’s failure wasn’t about AI limitations—it was about human shortcuts. The 8-month timeline taught the tech industry five lessons:

- Speed without understanding creates technical debt faster than traditional development. The 66% productivity tax is real, measured, and painful.

- Security can’t be bolted on later. CVE-2025-48757 exposed 170 apps because developers didn’t understand generated code well enough to protect their users.

- Junior developers face displacement while senior expertise becomes more valuable. The 20% employment drop for ages 22-25 versus stable/growing employment for 26+ shows AI amplifies experience gaps.

- AI adoption and AI trust are different metrics. Usage grew (76% to 84%) while confidence dropped (43% to 33%) because developers learned the difference between helpful tools and replacement fantasies.

- The industry self-corrects faster than expected. The path from Karpathy’s February tweet to November’s context engineering shift took 8 months. Fast Company’s “hangover” article in September marked the inflection point where reality overcame hype.

The hangover isn’t failure—it’s maturation. We skipped the fundamentals, hit the consequences, and adjusted course. Vibe coding as a term might fade, but the lesson persists: AI augments expertise when used with accountability. Skip the review, ignore the understanding, trust the vibes? You’ll build fast and break things in ways you can’t fix. That’s not the future of development. That’s just bad engineering with better tools.