Two major 2025 developer surveys, from JetBrains (24,534 developers, 194 countries) and Stack Overflow (49,000+ developers), reveal a productivity crisis hiding in plain sight. In the JetBrains data, 66% of developers don’t believe, or aren’t sure, that current metrics reflect their actual contributions. Meanwhile, 85% use AI tools regularly, and 1 in 5 saves 8+ hours a week. Developers are more productive than ever, yet measurement systems say otherwise. This isn’t a metrics problem. It’s a trust crisis.

The 66% Trust Gap: When Metrics Lie

The JetBrains 2025 State of Developer Ecosystem report drops a damning stat: two-thirds of developers don’t believe—or aren’t sure—that productivity metrics reflect their real work. This isn’t a measurement quirk. It’s organizational failure at scale.

What developers actually want, according to the research, is transparency about team processes, communication quality, tool satisfaction, and well-being. Instead, most get lines-of-code counts, commit-frequency tracking, and velocity targets. The disconnect is staggering.

Trust isn’t optional in engineering culture. When developers don’t believe metrics reflect their contributions, they either game the system (inflating story point estimates, splitting commits artificially) or mentally check out. The 66% distrust rate isn’t just a number: it’s turnover risk, disengagement, and the slow collapse of team cohesion.

AI Changes Everything. Metrics Notice Nothing.

Here’s the paradox: 85% of developers use AI tools regularly, 62% rely on at least one AI coding assistant, and nearly 9 out of 10 save at least 1 hour a week. One in five saves 8+ hours every week. That’s transformative productivity.

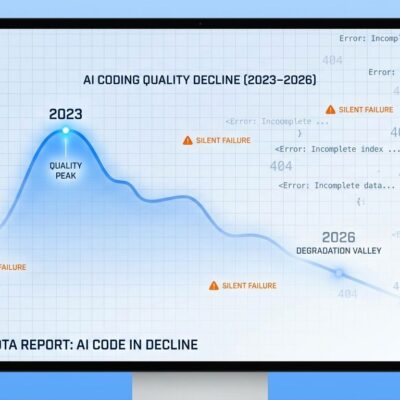

Yet traditional output metrics (lines of code, commit counts, pull request volumes) treat AI-augmented development like manual typing, counting every generated line as if it were written by hand. Worse, positive sentiment toward AI tools dropped from over 70% in 2023 and 2024 to just 60% in 2025. Developers feel AI’s limitations firsthand (hallucinations, context failures, review overhead) while organizations chase misleading output spikes.

The developer role has fundamentally shifted. AI handles boilerplate and repetitive tasks, while humans solve complex problems, conduct thoughtful code reviews, make architectural decisions, and reduce technical debt. Traditional metrics measure the first part (code volume) and ignore the second (value delivered). In the AI era, measuring productivity by code output is like measuring aircraft manufacturing progress by weight.

Human Factors Beat Technical Factors

The data reveals a fundamental rebalancing: 89% of developers say non-technical factors (collaboration, communication, goal clarity, peer support, feedback) influence their productivity, compared with 84% who cite technical factors such as tool performance and CI/CD reliability.

Read that again. More developers credit collaboration than tooling.

Yet most organizations obsess over infrastructure metrics (deployment frequency, build times) while ignoring the human systems that drive those numbers. The investment gap is stark: technical managers want 2× more focus on addressing communication issues and nearly 2× more investment in reducing technical debt. Companies aren’t listening.

This validates what developers have been saying for years: productivity isn’t about typing faster or deploying more often. It’s about working together effectively, understanding goals, having the right tools for the job, and feeling supported. The 66% distrust rate is what you get when you measure only half the equation.

Why Traditional Metrics Fail (And Harm Teams)

Lines of code, velocity, story points, commit frequency—these developer productivity metrics don’t just fail to capture productivity. They actively destroy it.

Lines of Code (LOC) penalizes refactoring. Simplifying 200 lines into 50 elegant, maintainable lines “reduces productivity” by 75%. It also incentivizes verbose, bloated code and ignores language differences (the same task can take far fewer lines in Python than in Java). Developer experience platform DX puts it bluntly: LOC “suggests companies view their engineers as typers, rather than engineers.”
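A few lines of Python make the arithmetic concrete. This is a minimal sketch of a naive LOC-delta score; `loc_change_percent` is a hypothetical name standing in for any metric that treats line-count growth as output.

```python
def loc_change_percent(lines_before: int, lines_after: int) -> float:
    """Percent change in line count, the way a naive LOC metric would score a change."""
    if lines_before <= 0:
        raise ValueError("lines_before must be positive")
    # Multiply before dividing so these small integer examples stay exact.
    return (lines_after - lines_before) * 100 / lines_before


# A refactor that shrinks a 200-line module to 50 lines with the same behavior.
refactor = loc_change_percent(200, 50)
# The same module padded out to 260 lines instead.
bloat = loc_change_percent(200, 260)

print(f"refactor: {refactor:+.0f}%")  # refactor: -75%
print(f"bloat: {bloat:+.0f}%")        # bloat: +30%
```

Under this score the refactor looks like a 75% drop and the padding looks like a gain, even though the refactor is the change a reviewer would actually want.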

Velocity and Story Points are even worse. They cannot be compared across teams, because each team defines points differently (Team A’s 50 points ≠ Team B’s 50 points). They’re trivially gamed through estimate inflation. And when managers set velocity targets, the metric stops being an empirical observation and becomes a performance trap. Scrum.org is unequivocal: “Story points are not the problem, velocity is.”
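Here is a toy illustration of both problems, assuming two hypothetical teams that finish the same ten tasks but estimate on different scales. The `Sprint` type and `velocity` helper are made up for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Sprint:
    team: str
    tasks_done: int       # same kind of work for every team in this example
    points_per_task: int  # each team's own estimation scale (hypothetical)


def velocity(sprint: Sprint) -> int:
    """Velocity as story points completed in one sprint."""
    return sprint.tasks_done * sprint.points_per_task


team_a = Sprint("A", tasks_done=10, points_per_task=5)
team_b = Sprint("B", tasks_done=10, points_per_task=2)
# Team B re-estimates the identical tasks at 4 points once a velocity target appears.
team_b_inflated = Sprint("B (inflated)", tasks_done=10, points_per_task=4)

for sprint in (team_a, team_b, team_b_inflated):
    print(sprint.team, velocity(sprint))
# A 50
# B 20
# B (inflated) 40
```

Nothing about the delivered work changed between the last two rows; only the estimates did.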

Commit Frequency rewards splitting work artificially, penalizes thoughtful problem-solving, and completely ignores code review contributions, mentorship, and architectural discussions—the work that actually delivers value.

The pattern is clear: output-focused metrics measure activity, not outcomes. Consequently, they optimize for the wrong things, can be gamed effortlessly, and—as 66% of developers will tell you—don’t reflect reality.

Better Frameworks Actually Exist

The industry doesn’t need to invent new productivity measurement. Two battle-tested frameworks already work: DORA and SPACE.

DORA (DevOps Research and Assessment), the research program now part of Google Cloud, measures outcomes through four metrics: Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Mean Time to Recovery. It balances speed (how fast you ship) against stability (how often you break things and how quickly you recover). Teams are classified as Low, Medium, High, or Elite performers. Critically, DORA is harder to game than LOC or velocity: you can’t fake deployment frequency without actually deploying.
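To show what the four metrics actually compute, here is a minimal Python sketch over a list of deployment records. The `Deployment` fields, the use of the median for lead time, and the approximation of recovery time as the gap between a failed deploy and restoration are all assumptions for illustration; DORA’s own guidance and individual CI/CD platforms define and collect these inputs in their own ways.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

HOUR = timedelta(hours=1)


@dataclass
class Deployment:
    committed_at: datetime                  # first commit in the change
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Approximate the four DORA metrics for deployments in a window of `window_days`."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    failures = [d for d in deploys if d.failed]
    lead_hours = [(d.deployed_at - d.committed_at) / HOUR for d in deploys]
    # Simplification: recovery is measured from the failed deploy, not from detection.
    recovery_hours = [
        (d.restored_at - d.deployed_at) / HOUR
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deployment_frequency_per_day": len(deploys) / window_days,
        "lead_time_for_changes_hours": median(lead_hours),
        "change_failure_rate": len(failures) / len(deploys),
        # Reported as 0.0 when nothing in the window failed.
        "mean_time_to_recovery_hours": (
            sum(recovery_hours) / len(recovery_hours) if recovery_hours else 0.0
        ),
    }


start = datetime(2025, 1, 6, 9, 0)
history = [
    Deployment(start, start + timedelta(hours=4)),
    Deployment(start + timedelta(days=1), start + timedelta(days=1, hours=6),
               failed=True, restored_at=start + timedelta(days=1, hours=7)),
    Deployment(start + timedelta(days=2), start + timedelta(days=2, hours=2)),
    Deployment(start + timedelta(days=3), start + timedelta(days=3, hours=8)),
]
metrics = dora_metrics(history, window_days=7)
print(metrics)
# median lead time 5.0 h, change failure rate 0.25, recovery 1.0 h, about 0.57 deploys/day
```

Speed (frequency, lead time) and stability (failure rate, recovery) come out of the same records, which is why pushing one pair up at the expense of the other shows up in the numbers.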

SPACE (Satisfaction and well-being, Performance, Activity, Communication and collaboration, Efficiency and flow), from researchers at Microsoft Research and GitHub with Nicole Forsgren as lead author, rests on a core insight: “Productivity cannot be reduced to a single dimension or metric.” The framework defines five dimensions and recommends tracking at least three for a holistic view. Importantly, it balances quantitative metrics (DORA, commits) with qualitative measures (satisfaction surveys, collaboration quality).
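One way to apply the “at least three dimensions” guideline is to tag every tracked metric with a SPACE dimension and check coverage. The sketch below assumes a hypothetical metric catalogue, and the dimension each metric is assigned to is one plausible mapping rather than an official one.

```python
from enum import Enum


class Space(Enum):
    SATISFACTION = "Satisfaction and well-being"
    PERFORMANCE = "Performance"
    ACTIVITY = "Activity"
    COMMUNICATION = "Communication and collaboration"
    EFFICIENCY = "Efficiency and flow"


MIN_DIMENSIONS = 3  # the "at least three" guideline


def dimensions_covered(tracked: dict[str, Space]) -> set[Space]:
    """The distinct SPACE dimensions touched by a catalogue of metrics."""
    return set(tracked.values())


def is_balanced(tracked: dict[str, Space], minimum: int = MIN_DIMENSIONS) -> bool:
    return len(dimensions_covered(tracked)) >= minimum


# Hypothetical catalogue: metric name -> the dimension it is assigned to.
tracked = {
    "quarterly satisfaction score": Space.SATISFACTION,
    "change failure rate": Space.PERFORMANCE,
    "deployment frequency": Space.ACTIVITY,
    "pull request review turnaround": Space.COMMUNICATION,
}
activity_only = {
    "lines of code": Space.ACTIVITY,
    "commit count": Space.ACTIVITY,
}

missing = sorted(d.value for d in Space if d not in dimensions_covered(tracked))
print(is_balanced(tracked), missing)  # True ['Efficiency and flow']
print(is_balanced(activity_only))     # False
```

The second catalogue, built only from lines of code and commit counts, fails the check because both metrics land in the same dimension.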

Research confirms what developers already know: satisfied developers are measurably more productive. Quarterly satisfaction surveys covering tool effectiveness, team processes, and well-being act as leading indicators of performance. Fix developer experience, and DORA metrics tend to improve. Ignore experience, and no amount of CI/CD optimization will save you.
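A quarterly survey only works as a leading indicator if someone watches the trend. This sketch assumes hypothetical 1-to-5 ratings grouped by survey area and flags any area whose average fell by at least half a point since the previous quarter; the area names and the threshold are illustrative, not a recommended standard.

```python
from statistics import mean

# Hypothetical 1-to-5 survey ratings: quarter -> survey area -> individual responses.
responses = {
    "2025-Q1": {"tools": [4, 4, 5, 3], "processes": [3, 4, 4, 4], "well-being": [4, 3, 4, 4]},
    "2025-Q2": {"tools": [4, 5, 4, 4], "processes": [3, 3, 2, 3], "well-being": [4, 4, 3, 4]},
}


def area_means(quarter: dict[str, list[int]]) -> dict[str, float]:
    """Average rating per survey area for one quarter."""
    return {area: mean(scores) for area, scores in quarter.items()}


def declining_areas(prev: dict[str, list[int]], curr: dict[str, list[int]],
                    threshold: float = 0.5) -> list[str]:
    """Areas whose average dropped by at least `threshold` points since `prev`."""
    before, after = area_means(prev), area_means(curr)
    return sorted(a for a in before if a in after and before[a] - after[a] >= threshold)


print(declining_areas(responses["2025-Q1"], responses["2025-Q2"]))  # ['processes']
```

A flagged area is a prompt for a conversation with the team, not a verdict on anyone’s performance.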

What Developers and Managers Should Do

If you’re a developer being measured by broken metrics, ask for transparency: “How are velocity numbers used, for sprint planning or for performance reviews?” Push for holistic measurement: DORA metrics, satisfaction surveys, collaboration quality. Document non-code contributions such as architecture decisions, mentorship, code reviews, and technical debt reduction. And use your AI productivity gains as evidence for outcome-based measurement.

If you’re an engineering manager, never set velocity or story point targets; use them for sprint planning only. Implement DORA metrics: GitLab reports them natively, and CI/CD platforms such as GitHub and CircleCI record the deployment data needed to compute them. Run quarterly developer satisfaction surveys. Explain why you’re tracking metrics: “We measure this to improve systems, not to evaluate individuals.” And invest 2× more in communication improvements and tech debt reduction. Your team is asking for exactly that in the survey data.

For organizations, the fix starts with culture: outcomes over output, quality over quantity. Create dedicated developer productivity engineering roles instead of burdening delivery-focused teams with measurement. Adopt the SPACE framework and track at least three of its dimensions. Stop comparing team velocities. Stop measuring lines of code. Start measuring what actually matters: shipping value, maintaining stability, and keeping developers engaged.

The 66% distrust rate isn’t inevitable. It’s a choice to cling to broken metrics instead of adopting frameworks that work.