Five days ago, Anthropic made a move that could reshape AI development: it donated the Model Context Protocol (MCP) to the Agentic AI Foundation, newly formed under the Linux Foundation. The foundation was co-founded by Anthropic, Block, and OpenAI, with backing from Google, Microsoft, AWS, Cloudflare, and Bloomberg, so this is more than corporate posturing. MCP already powers more than 10,000 active public servers and sees 97M+ monthly SDK downloads, and the donation puts it under vendor-neutral governance just as it becomes critical AI infrastructure.

The Integration Problem MCP Solves

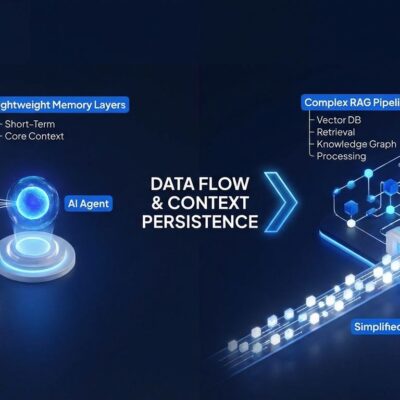

Here’s the pain point every AI developer knows: your model is only as useful as the data it can access. But connecting AI systems to external tools means building a custom integration for each combination, the classic n×m problem (n tools times m AI apps). Want your AI to work with GitHub and Postgres? Build two integrations. Add Slack? Three. Repeat that for ChatGPT, Gemini, and Copilot and you’re at nine separate connections, a number that multiplies with every tool or app you add.

MCP flips this model. It’s a universal protocol for AI-to-tool connections: think ONNX, but for agent integrations instead of model formats. Build one MCP server, and it works across ChatGPT (adopted March 2025), Google Gemini (confirmed April 2025), Microsoft Copilot, Cursor, and VS Code. Write once, deploy everywhere. That’s not a future promise: these clients already ship MCP support, and the SDKs’ 97M+ monthly downloads show developers are building on it now.
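A rough count makes the difference concrete. With point-to-point integrations every (tool, app) pair needs its own connector; with a shared protocol, each tool needs one server and each app one client. The sketch below is an idealized count (it assumes every app implements the protocol once and every server works unchanged in every app), and the function name is purely illustrative:

```python
def integrations_needed(num_tools: int, num_apps: int) -> dict[str, int]:
    """Count connectors needed to link every tool to every AI app."""
    return {
        # Point-to-point: one custom integration per (tool, app) pair.
        "point_to_point": num_tools * num_apps,
        # Shared protocol: one server per tool plus one client per app.
        "shared_protocol": num_tools + num_apps,
    }


# GitHub, Postgres, Slack across ChatGPT, Gemini, Copilot
print(integrations_needed(3, 3))   # {'point_to_point': 9, 'shared_protocol': 6}
print(integrations_needed(20, 5))  # {'point_to_point': 100, 'shared_protocol': 25}
```

At three tools the savings are modest; the gap widens quickly as either side grows, which is the real argument for a shared protocol.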

The architecture is straightforward: MCP servers expose data and functionality (tools, resources, and prompts), MCP clients inside your AI apps connect to them by exchanging JSON-RPC 2.0 messages, and OAuth 2.1 handles authorization for remote servers. Anthropic provides pre-built reference servers for Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer, vendors such as Stripe maintain their own, and Claude’s directory lists 75+ connectors alongside a growing ecosystem of 5,800+ community-built servers.
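Every MCP message is a JSON-RPC 2.0 object. The sketch below uses only the standard library to build a `tools/list` request (the method a client uses to discover a server’s tools) and to unwrap a response. The helper names and the canned reply are illustrative assumptions; a real client would use an official SDK, which also handles the `initialize` handshake and the transport.

```python
import json
from itertools import count
from typing import Any, Optional

_next_id = count(1)  # each request gets a new id so replies can be matched to it


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request with a fresh integer id."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def parse_response(raw: str) -> Any:
    """Return the `result` of a JSON-RPC 2.0 response, raising on an `error`."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "error" in msg:
        err = msg["error"]
        raise RuntimeError(f"JSON-RPC error {err['code']}: {err['message']}")
    return msg["result"]


print(make_request("tools/list"))
# {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# Made-up server reply for illustration; real tool entries also carry a
# description and a JSON Schema describing their inputs.
reply = '{"jsonrpc": "2.0", "id": 1, "result": {"tools": [{"name": "query"}]}}'
print([t["name"] for t in parse_response(reply)["tools"]])  # ['query']
```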

Why Linux Foundation Governance Changes the Game

Protocols only matter if they last. As GitHub’s Martin Woodward put it: “A protocol is only as strong as its longevity. Linux Foundation’s backing reduces risk for teams adopting MCP.”

Before December 9, MCP was open source but governed by Anthropic alone. That’s fine for experimentation but risky for production bets. What if Anthropic pivots? What if it adds a commercial licensing layer? What if Google or Microsoft fork it into competing standards?

The Agentic AI Foundation (AAIF) largely removes that risk. AAIF is a directed fund under the Linux Foundation, whose projects include Kubernetes, Node.js, and PyTorch, and that structure gives MCP vendor-neutral governance. The foundation’s principles spell it out: transparent decision-making, no vendor lock-in, project inclusion based on quality (not on which company writes the biggest check), and governance that “moves at the speed of AI.”

Three projects anchor AAIF: MCP (Anthropic), goose (Block’s local-first AI agent framework), and AGENTS.md (OpenAI’s open format for giving coding agents project-specific instructions). Eight platinum members (Anthropic, Block, OpenAI, Google, Microsoft, AWS, Cloudflare, and Bloomberg), plus 18 gold and 23 silver members, fund neutral infrastructure and community-driven evolution.

The takeaway for developers: it’s now safe to commit engineering resources to MCP. No single vendor controls it, so none can unilaterally kill it or turn it proprietary.

Adoption is Already Massive

This isn’t a bet on the future. MCP is production-ready and widely deployed:

- 10,000+ active public MCP servers

- 97M+ monthly SDK downloads (Python and TypeScript)

- 37,000 GitHub stars in eight months

- ChatGPT, Gemini, Copilot, Cursor, VS Code integration

- Fortune 500 enterprises in production

- Server downloads jumped from 100K (November 2024) to 8M (April 2025)
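The download figures imply steep compounding. Assuming smooth month-over-month growth between the two reported data points (an idealization; real adoption is lumpier), the implied rate can be worked out like this, with the function name chosen for illustration:

```python
def monthly_growth_rate(start: float, end: float, months: int) -> float:
    """Constant month-over-month growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / months) - 1


# Server downloads: 100K in November 2024, 8M in April 2025 (five months later)
rate = monthly_growth_rate(100_000, 8_000_000, 5)
print(f"{8_000_000 / 100_000:.0f}x overall, about {rate:.0%} per month")
# 80x overall, about 140% per month
```

In other words, downloads roughly multiplied by 2.4 every month over that window.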

Microsoft is going all-in: every new ERP agent uses MCP, with existing agents migrating by December 2025, and Azure’s AI Agent Service shipped with MCP built in back in May. Block and Apollo integrated MCP early and are running it in production. Market projections point the same way: one estimate has the market around MCP growing from $1.2 billion in 2022 (MCP itself launched in November 2024) to $4.5 billion by the end of 2025, and expects 90% of organizations to adopt the protocol.

For a real-world example, consider Cascade, the AI agent in Codeium’s Windsurf editor. Developers can point it at custom MCP servers, such as the Google Maps server, and ask questions like “Find the distance between the office and the airport.” Cascade calls the server’s tools over MCP and the server queries the Maps API, so no custom integration code is required.
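Under the hood, a question like that becomes a `tools/call` request from the client to the server. The sketch shows the shape of that message. The tool name `maps_distance_matrix` and its argument names are assumptions for illustration; a real client reads the actual names and input schema from the server’s `tools/list` response, and the agent, not the developer, fills in the arguments from the user’s question.

```python
import json


def tools_call(request_id: int, tool: str, arguments: dict) -> str:
    """Build the JSON-RPC 2.0 `tools/call` request an MCP client sends."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


# Hypothetical tool and argument names; check the server's tools/list output.
print(tools_call(7, "maps_distance_matrix", {
    "origins": ["Office address"],
    "destinations": ["Airport address"],
    "mode": "driving",
}))
```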

What Developers Should Do Now

If you’re building AI tools, here’s your playbook:

Start experimenting: Grab the pre-built servers (GitHub, Postgres, Slack) and test them with Claude Desktop. Browse the 75+ connectors in Claude’s directory. Build a simple MCP server using the Python or TypeScript SDKs.
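Before reaching for an SDK, it helps to see roughly what one does for you: keep a registry of tools, answer `tools/list` with their descriptions, and route `tools/call` to the right function. The toy below does only that, with the standard library; `tool`, `add`, and `handle` are hypothetical names, and it deliberately omits the `initialize` handshake, input schemas, and the stdio or HTTP transport, so treat it as an illustration rather than a conforming server. In the official Python SDK, the `FastMCP` class and its `@mcp.tool()` decorator handle all of this.

```python
import json
from typing import Any, Callable, Optional

# Toy registry standing in for what an SDK manages for you.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b


def _reply(req_id: Any, result: Optional[dict] = None, error: Optional[dict] = None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(raw)
    req_id, params = req.get("id"), req.get("params", {})
    if req["method"] == "tools/list":
        tools = [{"name": name, "description": fn.__doc__} for name, fn in TOOLS.items()]
        return _reply(req_id, {"tools": tools})
    if req["method"] == "tools/call":
        fn = TOOLS.get(params.get("name"))
        if fn is None:
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        value = fn(**params.get("arguments", {}))
        # Tool results come back as a list of content items.
        return _reply(req_id, {"content": [{"type": "text", "text": str(value)}]})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})


print(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/call", '
             '"params": {"name": "add", "arguments": {"a": 2, "b": 3}}}'))
# {"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "5"}]}}
```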

Plan your migration: If you’re maintaining custom AI integrations, map a transition path to MCP. The November 25, 2025 spec update added enterprise-ready features—OAuth 2.1 for any identity provider, rate limiting, token expiration policies, and long-running task APIs for multi-minute workflows.
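Whatever expiration policy an authorization server enforces, a client typically honors it by tracking each token’s expiry and refreshing a little early. Here is a minimal, MCP-agnostic sketch, assuming a standard OAuth token response that includes `expires_in` (seconds); the class and function names and the 60-second safety margin are illustrative choices, not part of any SDK.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class AccessToken:
    value: str
    expires_at: float  # absolute Unix time derived from `expires_in`


def from_token_response(resp: dict, now: Optional[float] = None) -> AccessToken:
    """Convert an OAuth token response into a token with an absolute expiry."""
    issued_at = time.time() if now is None else now
    # Assumes the server included `expires_in` (seconds until expiry).
    return AccessToken(resp["access_token"], issued_at + resp["expires_in"])


def needs_refresh(token: AccessToken, now: Optional[float] = None,
                  margin: float = 60.0) -> bool:
    """True once the token is within `margin` seconds of expiring."""
    current = time.time() if now is None else now
    return token.expires_at - current <= margin


tok = from_token_response({"access_token": "example", "expires_in": 3600}, now=1_000.0)
print(needs_refresh(tok, now=1_000.0))  # False: an hour left
print(needs_refresh(tok, now=4_560.0))  # True: 40 seconds left
```

Refreshing ahead of the deadline avoids a request failing mid-flight because its token expired between the check and the call.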

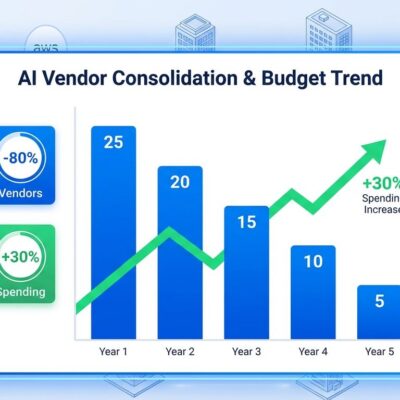

Budget accordingly: Enterprise deployments aren’t free. By some estimates, basic MCP infrastructure (setup, security, essential integrations) costs $100K-$500K, while full-scale deployments with multi-region support and advanced security reach $1M-$2M. Operational costs reportedly run 30-50% higher than traditional AI hosting because of protocol overhead and stricter security requirements. What you’re paying for is interoperability and future-proofing.
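To turn the percentage into a budget line, apply it to what you pay for conventional AI hosting today. In this sketch the $20,000 monthly baseline is purely hypothetical, the 30-50% uplift is the estimate quoted above rather than a measured figure, and the function name is illustrative.

```python
def ops_cost_range(baseline_monthly: float, low_pct: int = 30,
                   high_pct: int = 50) -> tuple[float, float]:
    """Apply a low/high percentage uplift to a baseline hosting cost."""
    return (baseline_monthly * (100 + low_pct) / 100,
            baseline_monthly * (100 + high_pct) / 100)


# Hypothetical baseline: $20,000/month of traditional AI hosting
low, high = ops_cost_range(20_000)
print(f"${low:,.0f} - ${high:,.0f} per month")  # $26,000 - $30,000 per month
```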

Join the community: MCP’s GitHub organization is active, the protocol evolves based on real-world feedback, and the vendor-neutral governance means your voice matters.

Why This Moment Matters

Competing AI vendors rarely align on standards. When they do—like ONNX for model formats or Kubernetes for container orchestration—it signals a market shift from experimentation to production. OpenAI, Google, Microsoft, and Anthropic backing the same protocol through a neutral foundation isn’t hype. It’s the AI agent era moving from “maybe someday” to “build it now.”

The Agentic AI Foundation’s mission says it plainly: “Ensure agentic AI evolves transparently, collaboratively, and in the public interest.” That’s not marketing speak. It’s a commitment to open governance at a moment when AI tooling could easily fragment into vendor-controlled silos.

MCP is becoming critical infrastructure. The Linux Foundation donation makes it safe to build on. If you’re developing AI agents, integrating AI into existing systems, or just watching where the industry is heading, MCP is worth your attention—and probably your time.